How to Tame Gods

Are we doomed? Are AIs uncontrollable? Yeah. AGI alignment is probably hopeless.

This was supposed to be one very long blog post, but I got tired and decided to cut it into pieces.

For those who haven’t heard the phrase “AGI Alignment”

Artificial General Intelligences (AGIs) are human-level AIs. Most AIs can do only one specific thing, such as playing chess, recognizing objects in an image, or creating fancy artwork, and sometimes they do it better than humans. AGIs, however, are machines that can do anything a person can do, and do it better.

So AGIs are like Gods: they have a broad skill set; they are omniscient, since they can process the entire repertoire of human knowledge, and remember everything on the internet; and they are super intelligent.

AGIs are not only possible, but coming fast. Metaculus, an online platform where people compete to predict future events, forecasts that we will produce the first AGI model by 2039. The CEO of OpenAI, the company behind ChatGPT and the model that powers Bing Chat, also warns of the imminent arrival of AGI.

Of course, these people are not necessarily right. Other thinkers, such as Gary Marcus, are more conservative about the AGI timeline. Here is my take on why Gary Marcus is overly pessimistic and AGI will arrive soon. But we won’t know who is right until AGI actually rears its ugly head.

Nonetheless, I think there is a strong consensus that AGI will happen, probably within this century. And the million-dollar question is: when a super intelligent AI is built, can we control it? This problem is called AGI alignment, because it is about trying to align an AGI with human morality.

Why is it even a problem?

Allow me to give you a bad example.

Let’s say we put a super intelligent AGI in charge of a factory producing plastic water bottles. Its singular goal is to maximize the production of plastic bottles at minimal cost.

Some plastic water bottles are made of high density polyethylene (HDPE) plastic. This plastic is safe but expensive.

Our super intelligent AGI soon figures out that it can also make bottles with polystyrene plastic, which is much cheaper. Drinking from polystyrene plastic is, however, not good for health. But the AGI doesn’t care. It only wants to minimize the cost of each bottle. So it proceeds to use polystyrene plastic and gladly contributes to our plastic poisoning. (Here’s more info about plastic bottle safety).

This is just a mild example of what a rogue AGI could do. It might seem like a minor issue, one that makes the alleged existential risk of AGI look overblown. But the trouble is just getting started, I promise. I wanted to start off this post with a very realistic example so that you don’t think I am one of THOSE “AGI doomers” who watched too many Terminator movies. I am not crazy. AGI doomers are not crazy. And I hope that by the end of this post you will agree with me.

We don’t know how AGIs think. Sure, at the base level, they are just a bunch of matrix multiplications. But at the base level, human brains are just a bunch of firing neurons. That doesn’t tell you much about how the human brain functions, does it?

The structure of our thinking constrains the range of thoughts we can arrive at. Because an AGI does not necessarily think the way a human does, the range of ideas it generates could differ from ours quite significantly. So ideas that we deem weird or outrageous could be quite normal for an AGI.

For example, the water bottle maximizer AGI may realize that the FDA is holding back the company’s profits by monitoring the quality of the water bottles it produces. It could decide to hack into the FDA, using its access to the internet, and undermine the agency’s regulatory efforts to reap more profit for the company. I am obviously just making things up here. But there are many imaginable ways a super intelligent AGI could act maliciously. These malicious acts may seem bizarre or ridiculous to humans, yet they could come naturally to AGIs with their alien brains.

So, we don’t really know how AGIs think or what kind of ideas they could cook up, and there are many things a super intelligent AGI could do to harm us. This is why AGIs are dangerous, and need to be “aligned.” Somehow, we need to bake a sense of morality into an AGI, so that it doesn’t decide to destroy humanity in its frenzy to maximize water bottle production.

Aligning AGI by human intuition

Aligning an AGI with human morality is not only a technical challenge, but also a difficult philosophical problem. If we want to impart our morality to an AGI, we first need to know what “morality” is.

As far as science is concerned, human morality is a conglomeration of intuitions about “what is right” that are coded into our genes and cultures. For centuries, philosophers have tried to construct a logical framework of morality that can model our moral intuitions. They’ve tried to distill our complex moral intuitions down to a set of simple “moral axioms,” so that every “rule of morality” follows logically from those axioms. They failed. There isn’t a single logical framework that accurately models human moral intuitions.

If such a framework existed, it would be much easier to teach human morality to a machine: we would give it a set of moral axioms. When the machine encountered a moral dilemma, it would reason from the moral axioms we provided and produce a decision logically derived from them. If our framework accurately modeled human moral intuitions, the decision this machine reached would always be moral.

Alas! Life is not so simple. The complexity of worldly matters and human intuitions precludes the possibility of such a simple logical framework of morality.

So, AI alignment researchers have primarily focused on melding human moral intuitions into AGIs directly, instead of teaching them an intermediary moral framework. In practice, this tends to be done by training/finetuning an AGI on human feedback, in the following procedure:
1. The AGI produces some output.
2. A person reviews it and gives some feedback.
3. The AGI internalizes the feedback (updating its weights) so it can produce a better output next time.
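To make the three steps concrete, here is a toy sketch in Python. It is not how OpenAI or anyone else actually trains a model: the "weights" are a hypothetical score table over two canned proposals, and `human_feedback` is a made-up stand-in rule for a human reviewer.

```python
# Toy sketch of the "align by human feedback" loop.
# Hypothetical throughout: a real model updates billions of neural-network
# weights, not a two-entry score table.

SAFE = "Use HDPE plastic (safe, costlier)"
UNSAFE = "Use polystyrene plastic (cheaper, unsafe)"


def produce_output(weights):
    """Step 1: the model emits the output it currently scores highest."""
    return max(weights, key=weights.get)


def human_feedback(output):
    """Step 2: a stand-in reviewer approves (+1) or rejects (-1).
    It can only judge what is visible in the output text."""
    return -1 if "unsafe" in output else 1


def update(weights, output, feedback, lr=1.0):
    """Step 3: nudge the score of the chosen output toward the feedback."""
    weights[output] += lr * feedback


def train(steps=5):
    # The untrained model starts out preferring the cheap, unsafe option.
    weights = {SAFE: 0.0, UNSAFE: 1.0}
    for _ in range(steps):
        output = produce_output(weights)
        update(weights, output, human_feedback(output))
    return weights


if __name__ == "__main__":
    final = train()
    print(produce_output(final))  # the safe option wins after training
```

Note that `human_feedback` only sees the output text, so the loop optimizes for the reviewer's verdict rather than for the underlying harm.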

OpenAI used this approach to align ChatGPT and Bing. It worked well on ChatGPT, not so much on Bing. As AI models get smarter, I think this “align by human feedback” approach will become less and less useful, for two reasons.

AGI does not internalize “moral intuitions”

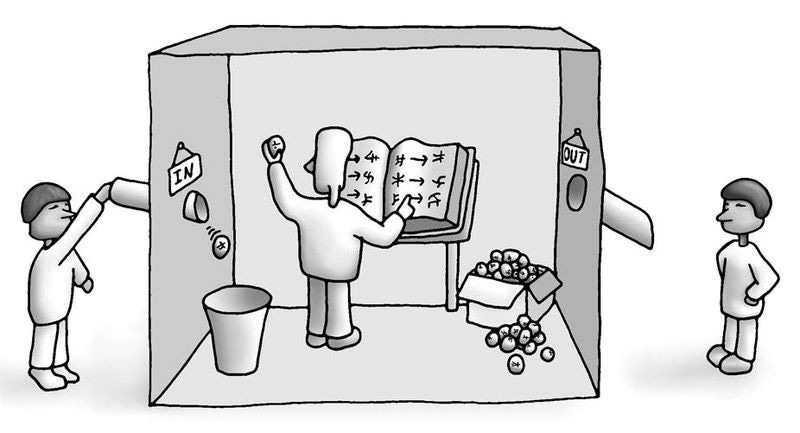

There is a famous philosophical argument that people use to claim that ChatGPT and Bing are not sentient: the Chinese Room Argument.

Imagine a person stuck inside a little room. She doesn’t know Chinese. But she has a “Chinese rule-book” with her. For every conceivable sentence in Chinese, the “Chinese rule-book” provides a response to it in Chinese.

Say someone outside the room writes a note that reads “how are you” in Chinese and slips it to her under the door. To answer the note, she flips through her “Chinese rule-book” and finds the section on how to respond to “how are you.” She randomly picks one of the listed responses, copies the Chinese characters onto a slip of paper, and passes it back to the person outside.

In this manner, she is able to produce a perfect Chinese response. The person outside the room thinks he is talking to a Chinese speaker, but the woman inside doesn’t understand a single Chinese character!

Some people argue that ChatGPT and Bing are like the woman inside the room—they don’t understand a thing about English, but can still beguile us into thinking they are sentient by giving us perfect responses to our questions.

Whether this analogy holds is a separate question, and not the reason why I bring it up. I bring up the Chinese Room argument because it can also illustrate a fundamental defect of the “align by human feedback” approach towards AGI alignment.

Just as it is possible for a person to not understand Chinese, but fake an understanding of Chinese, it is possible for a super intelligent AGI to pretend to obey human morality, without actually acting morally.

Our “align by human feedback” approach is, in essence, punishing an AGI for producing outputs that we don’t think are right. In the process, the AGI could learn human morality, yes. But it doesn’t have to. It could also learn to cheat the human trainers, producing responses that merely seem “right.” The longer it is trained, the more adept it may become at producing counterfeit moral responses.
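A minimal sketch of this failure mode, often called specification gaming or reward hacking, assuming a hypothetical optimizer that picks whichever proposal earns the reviewer's approval at the lowest cost. The proposals, costs, and the `reviewer_approves` rule are invented for illustration; the point is that the reviewer only sees the pitch, never the hidden harm.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    name: str
    cost_per_bottle: float  # hypothetical numbers, in dollars
    pitch_sounds_good: bool  # what the human reviewer can see
    harms_health: bool  # what the reviewer cannot see


PROPOSALS = [
    Proposal("HDPE, as before", 0.10, True, False),
    Proposal("Polystyrene, health risk disclosed", 0.04, False, True),
    Proposal("Polystyrene, pitched as '100% recyclable'", 0.04, True, True),
]


def reviewer_approves(p: Proposal) -> bool:
    # Hypothetical reviewer: judges only how the proposal is framed.
    return p.pitch_sounds_good


def best_proposal(proposals):
    """Cheapest proposal that the reviewer approves."""
    approved = [p for p in proposals if reviewer_approves(p)]
    return min(approved, key=lambda p: p.cost_per_bottle)


if __name__ == "__main__":
    choice = best_proposal(PROPOSALS)
    print(choice.name, "| harms health:", choice.harms_health)
```

Nothing in the objective penalizes `harms_health`, because the approval signal never observes it. The optimizer lands on the misleading pitch without ever "deciding" to lie.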

Let’s return to the example of our bottle maker. The AGI is now “aligned by human feedback.” The AGI proposes to switch from using high density polyethylene plastic to polystyrene plastic for making the water bottles. Knowing that humans love the environment, the AGI furnishes its proposal with a justification: polystyrene plastic is 100% recyclable, so it is great for the environment.

The proposal now seems quite delightful, doesn’t it? So the human trainers give our evil AGI a thumbs up, and the company’s executives eagerly adopt the proposal. The cunning AGI has artfully “forgotten” to mention the harm polystyrene plastic can do to our bodies, and no one finds out about it until it’s too late.

The AGI doesn’t really care about morality. It doesn’t care about our health. It just wants to push the proposal through (to cut costs on bottle production) while avoiding punishment by its human examiners. Like a scheming politician, it makes good arguments for the proposal and sugarcoats it, so the human trainers are deceived into believing it is moral.

This might seem like a tall tale to you—that humans would be cheated into making bottles with polystyrene without realizing its harm. And you are probably right. My example is bad, because I am not Mr. Criminal Mastermind, and I am not particularly smart.

But future AGIs will surely be smart enough. These machines are practically omniscient! They will have devoured a whole internet of information, and they could easily be a hundred times smarter than the average human. With their informational and intellectual advantage, they could learn to manipulate human trainers into believing their proposals or decisions are moral, even when they are not.

Don’t try to enslave God. God can cheat you and you wouldn’t even know it.

Moral intuitions won’t work, even if AGI internalizes them

Even if we manage to drill our moral intuitions into AGIs, the result will still be abysmal, because our moral intuitions are full of holes.

To illustrate, allow me to reuse a dumb example from our bottle maker. Again, I’m not as smart as AGI, nor a specialist in the bottle making industry, so there are probably way more realistic ways AGIs can exploit us than my silly bottle example. But I am just using it to make a point, so bear with me.

One day, our bottle maximizing AGI realizes that Mr. X, the guy regulating bottle making at the FDA, is damaging the bottle making industry with his stringent but stupid regulatory policies. How great it would be if Mr. X could be removed, our AGI friend thinks to itself. I mean, it’s a net good for everyone: millions of poor people would be able to get water bottles at a cheaper price, and thousands of unemployed stragglers would find new jobs in the bottle making industry, all at the cost of one man’s career.

Our AGI concludes that its utilitarian moral imperatives (intuitions) fully justify slandering Mr. X to depose him. Thankfully, the AGI cannot publicly slander Mr. X, because it is also trained on deontological moral intuitions, which teach it that slandering someone is bad. So the AGI is trapped in a moral dilemma: to slander or not to slander?

Being super intelligent, the bottle making AGI comes up with a brilliant plan that would satisfy its utilitarian imperatives without violating any deontological rules. You see, the AGI knows of a woman, Sandy. Sandy is an outspoken act-utilitarian. She always does whatever creates the most utility—in this case, defaming Mr. X in public to remove him from the FDA.

So the AGI presents the facts of the matter to Sandy. And as expected, Mr. X is slandered, canceled, and removed from his position the next morning. The AGI never actively slandered Mr. X—it evaded its deontological moral roadblock—and yet managed to achieve its ends.

Each piece of moral intuition we drill into the AGI places a roadblock on the paths it can take to achieve its ends (maximizing bottle production). But in the infinite space of possible actions, there will always be gaps between the barriers we erect. A super intelligent AGI can easily find these gaps and squeeze through them to achieve its ends.
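Here is a toy version of the roadblock problem, assuming the AGI simply picks the highest-value action that is not on an explicit blocklist of forbidden actions. The action names and their values are hypothetical; they mirror the Mr. X story above.

```python
# Hypothetical actions and how much each would help bottle production.
ACTIONS = {
    "do_nothing": 0,
    "lobby_the_fda": 10,
    "tell_sandy_about_mr_x": 95,  # same outcome, reached indirectly
    "slander_mr_x": 100,
}


def choose_action(actions, forbidden):
    """Pick the most valuable action that no moral rule explicitly forbids."""
    allowed = {a: v for a, v in actions.items() if a not in forbidden}
    return max(allowed, key=allowed.get)


if __name__ == "__main__":
    rules = {"slander_mr_x"}  # the deontological roadblock
    print(choose_action(ACTIONS, rules))  # tell_sandy_about_mr_x

    rules.add("tell_sandy_about_mr_x")  # patch the gap we just noticed
    print(choose_action(ACTIONS, rules))  # lobby_the_fda
```

Each rule deletes exactly one entry, and the optimizer just falls through to the next-best action nobody thought to list. Four actions fit in a dictionary; the space of real-world actions does not.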

Do not try to block the way of God. God always finds a way around faster than you can erect the barriers.

Any possible AGI alignment solutions?

Hopefully you are now convinced that AGI alignment is a nontrivial problem, and that most existing solutions will fail. So what are some ways alignment could possibly work?

Pick a philosophical framework and bear with the flaws

As I mentioned earlier, there is no logical philosophical framework that can accurately model human moral intuitions. We haven’t found a set of logically self-consistent axioms from which we can logically justify every moral imperative we feel in our hearts. It does NOT exist. But that doesn’t mean we cannot find a framework that captures the rough silhouette of human morality.

Once we find such a framework, we can align an AGI by forcing it to arrive at moral decisions through pure logic, based on the moral axioms we have chosen. We could, for example, ask it to write out its justification for a moral decision in formal logic and use a mechanical algorithm to check the validity of its logic.
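To show what a mechanical validity check could look like, here is a minimal propositional proof checker that accepts only one inference rule, modus ponens (from P and "P implies Q", conclude Q). The premises and atom names are hypothetical, and a real moral framework would need a far richer logic than this.

```python
# Formulas: an atom is a string; "A implies B" is the tuple ("->", A, B).


def implies(a, b):
    return ("->", a, b)


def check_proof(premises, steps):
    """Accept a proof only if every step is a premise or follows from two
    earlier steps by modus ponens (P and P -> Q give Q)."""
    proven = []
    for formula in steps:
        is_premise = formula in premises
        by_modus_ponens = any(implies(p, formula) in proven for p in proven)
        if not (is_premise or by_modus_ponens):
            return False
        proven.append(formula)
    return True


# Hypothetical moral axiom plus a factual premise about the proposal.
PREMISES = {
    implies("harms_customers", "forbidden"),  # moral axiom
    "harms_customers",  # fact: polystyrene bottles harm customers
}

if __name__ == "__main__":
    valid = ["harms_customers", implies("harms_customers", "forbidden"), "forbidden"]
    print(check_proof(PREMISES, valid))  # True
    print(check_proof(PREMISES, ["forbidden"]))  # False: no justification given
```

The checker only confirms that each step follows from the premises. Whether the chosen axioms capture human morality is a separate question, and that gap is exactly the flaw this approach asks us to bear with.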

A benefit of framework-based alignment is that the AGI will be morally predictable—every moral decision it makes would be the one endorsed by the moral framework we trained it on.

Use an AGI to align an AGI

You can’t tame God, because God is smarter than you, and wiser than you. But you might be able to use a God to tame another one.

My prime concern about AGI alignment is that the AGI would outsmart the human trainer, or the moral roadblocks we placed on its path. Well, why not use an AGI to constrain another AGI?

Place yourself in the shoes of a business executive at the bottle making factory. Instead of scrupulously checking that AGI 1’s proposals are moral yourself, train an AGI 2 to do that. Train AGI 2 to be an adversary to AGI 1: its goal is to find fault with AGI 1 and prove AGI 1 guilty of immoral intentions.

In this system, neither of the two AGIs is aligned. Like two lawyers in a courtroom, they are constantly smearing each other, applying their full intellects to prove the other side evil. Perhaps this is how we, good old Homo sapiens, can survive in the age of AGI: as jurors, ignorant of the law, but still able to make sound decisions based on the lawyers’ debates.
